The Next Training Set: Capturing Social Context for Embodied AI

By Roch Nakajima, CMO, Noitom Robotics

Why the social contract—not just safety rules—will decide where robots belong

In Hanoi, you step off the curb, keep a steady pace, and scooters stream around you like water finding its line. In Tokyo, you wait: red man, green man—no debate—even if the intersection is empty. Those are two perfectly rational ways to share a street, rooted in two different social contracts. Vietnam’s streets are dominated by two‑wheelers; “negotiated flow” is the everyday norm. Japan’s streets encode order and predictability; pedestrians are legally expected to wait, and most people do. Neither is better; each is coherent. That difference is exactly what our machines must learn to read.

Let’s be clear: the street‑crossing story is just an example. The bigger question is whether robots—and autonomous systems more broadly—can be imbued with a local social contract, moment to moment. Not just human–robot, but robot–robot too. Should a barista robot in Paris behave differently from one in Los Angeles? If we want trust and adoption, the answer is yes.

The science says culture matters

Social norms aren’t universal; they vary across “tight” cultures (strong norms, low tolerance of deviation) and “loose” cultures (weaker norms, higher tolerance). That’s not armchair sociology: it’s an empirical result from a 33‑nation study. And when MIT’s Moral Machine experiment asked millions of people how autonomous vehicles should behave in moral edge cases, the answers clustered by culture. If norms and expectations diverge, the AI’s “good behavior” must diverge too.

What counts as “manners” for machines

For robots, the social contract shows up as space, timing, signals, and speech:

- How close you’re allowed to pass (personal space and lane formation).

- When you yield and when you “thread the needle.”

- Whether you make eye contact, nod, or use a voice cue before moving.

- Whether you chit‑chat at the counter or keep it brisk; whether you prompt for a tip at all.

Human–robot interaction research backs this up: people engage more, and complain less, when robots display culturally tuned social cues and navigation behavior. “Socially aware navigation” is a field precisely because rigid, one‑size‑fits‑all rules fail in real crowds.

Rules are not enough; robots need experience

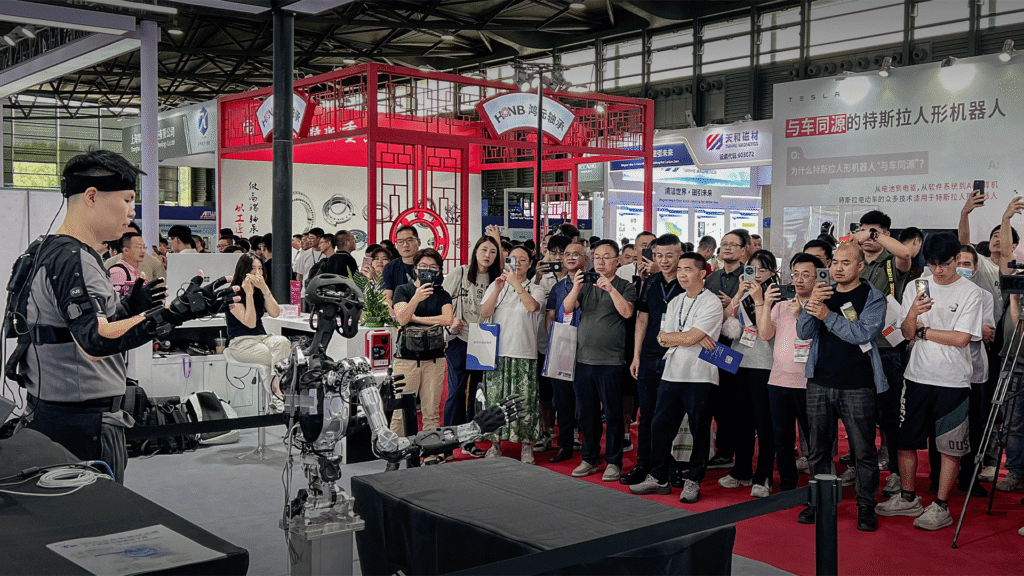

Traffic codes and safety margins are necessary, but they are not sufficient. Our industry has learned this the hard way: you can write impeccable rules and still get awkward, brittle behavior in the wild. What changes that is lived, multimodal experience—sight, sound, motion, touch—captured and aligned in real environments, then distilled into habits. As Dr. Tristan Dai put it, robots don’t just need more code; they need richer experience. That’s a data problem before it’s an algorithm problem.

At Noitom Robotics we’re focused on that layer: pipelines for high‑fidelity, synchronized human–environment interaction data and teleoperation loops that let robots practice local norms safely before they’re trusted to improvise. In embodied AI, turning motion into meaning is the bridge from “works-in-sim” to “belongs-in-our-world.”

Paris vs. Los Angeles: the barista test

In Paris, service is typically included in the price (service compris); tipping is modest and often optional, though card terminals have lately nudged behavior during tourist surges. In the United States, tipping is expected, yet disputed, and the prompts themselves can trigger frustration. A robot that treats these markets the same will feel tone‑deaf in one of them. Practical takeaway: design the payment and conversation flows for local norms (what you prompt, how you ask, and whether you make small talk) just as carefully as you froth the milk for the cappuccino.
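
To make that concrete, here is a minimal Python sketch of a per‑market counter flow. The `ServiceProfile` fields, the `checkout_steps` function, and every value in the two example profiles are hypothetical placeholders chosen for illustration, not measured norms for Paris or Los Angeles; in a real deployment they would come from local data and local staff.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServiceProfile:
    """Hypothetical per-market settings for a barista robot's counter flow.

    Every value used below is an illustrative placeholder, not a measured norm.
    """
    locale: str
    greeting: str
    small_talk_turns: int                # optional chit-chat exchanges to offer
    prompt_for_tip: bool                 # ask for a tip at all?
    tip_presets: tuple[int, ...] = ()    # suggested tip percentages, if asking
    allow_skip_tip: bool = True          # always show a no-penalty "no tip" option


def checkout_steps(profile: ServiceProfile, total: float) -> list[str]:
    """Return the ordered steps the robot runs at the counter for one order."""
    steps = [f"say: {profile.greeting}"]
    steps += ["offer_small_talk"] * profile.small_talk_turns
    steps.append(f"show_total: {total:.2f}")
    # Only show a tip screen when the market profile asks for one.
    if profile.prompt_for_tip and profile.tip_presets:
        options = [f"{p}%" for p in profile.tip_presets]
        if profile.allow_skip_tip:
            options.append("no tip")
        steps.append("tip_prompt: " + " / ".join(options))
    steps.append("take_payment")
    return steps


if __name__ == "__main__":
    # Placeholder profiles for illustration only.
    paris = ServiceProfile("fr-FR", "Bonjour !", small_talk_turns=0, prompt_for_tip=False)
    la = ServiceProfile("en-US", "Hi there!", small_talk_turns=1,
                        prompt_for_tip=True, tip_presets=(15, 18, 20))
    for profile in (paris, la):
        print(profile.locale, checkout_steps(profile, 4.50))
```

Keeping the tip prompt and the small‑talk budget as data, rather than branching on city names in code, is what would let the same robot ship to both markets with only a profile change.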

Robot–robot social contract is coming, too

This isn’t only about humans. When fleets of autonomous systems share space, they need conventions that go beyond hard protocols. We’re already seeing research on connected vehicles “talking” to negotiate merges and unprotected turns; in multi‑agent settings, norms can emerge—and sometimes drift—without explicit programming. If one city is “tight” and another is “loose,” robot–robot etiquette should adapt: how assertively to enter a gap, when to wave the other agent through, how to coordinate in language humans can audit.
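
One way to picture “how assertively to enter a gap” is the gap‑acceptance idea from traffic engineering: an agent enters only if the available time gap is at least a critical gap. The sketch below is a toy model under that assumption; `Etiquette`, `negotiate_gap`, the courtesy override, and the numbers in the two profiles are all hypothetical. Each decision carries a plain‑language summary, the kind of human‑auditable record described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Etiquette:
    """Hypothetical robot-robot etiquette settings for one city or venue."""
    name: str
    critical_gap_s: float   # smallest time gap (seconds) this robot will enter
    courtesy_wait_s: float  # wave a waiting peer through once it has waited this long


@dataclass(frozen=True)
class Decision:
    action: str   # "enter" or "yield"
    summary: str  # plain-language record a human auditor can read


def negotiate_gap(rules: Etiquette, gap_s: float, peer_wait_s: float) -> Decision:
    """Toy gap-acceptance rule with a courtesy override.

    gap_s: predicted time gap available in the traffic stream.
    peer_wait_s: how long a competing agent has already waited for the same gap.
    """
    tag = f"[{rules.name}]"
    # Courtesy first: a peer that has waited long enough goes ahead.
    if peer_wait_s >= rules.courtesy_wait_s:
        return Decision("yield", f"{tag} Waved the peer through: it had waited "
                                 f"{peer_wait_s:.1f}s (limit {rules.courtesy_wait_s:.1f}s).")
    # Otherwise enter only if the gap meets this locale's critical gap.
    if gap_s >= rules.critical_gap_s:
        return Decision("enter", f"{tag} Entered: gap {gap_s:.1f}s >= critical gap "
                                 f"{rules.critical_gap_s:.1f}s.")
    return Decision("yield", f"{tag} Waited: gap {gap_s:.1f}s < critical gap "
                             f"{rules.critical_gap_s:.1f}s.")


if __name__ == "__main__":
    # Placeholder profiles: same situation, different local etiquette.
    cautious = Etiquette("cautious", critical_gap_s=4.0, courtesy_wait_s=5.0)
    assertive = Etiquette("assertive", critical_gap_s=2.5, courtesy_wait_s=10.0)
    for rules in (cautious, assertive):
        print(negotiate_gap(rules, gap_s=3.0, peer_wait_s=6.0).summary)
```

In this toy setup the same 3‑second gap produces a yield under the cautious profile and an entry under the assertive one, and both outcomes arrive with a sentence a regulator could read.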

A playbook for building local manners into robots

Here’s how we operationalize this without turning every deployment into a sociology PhD:

- Ship a cultural overlay, not just a safety stack.

Add an explicit “social behavior” layer to the robot OS—proxemics, pacing, yielding, gaze/speech patterns—that can be configured per city, venue, or even time of day. Treat it like a locale pack, versioned and testable. Social‑navigation research gives you the cues; your job is to ground them in local data.

- Train on hyperlocal, multimodal data.

Capture what “polite” looks like there: the foot‑traffic flows, the soundscape, the micro‑gestures people use to negotiate space. Robots learn these subtleties from synchronized vision–motion–audio–force streams, not from rules alone. Build this into your data factory.

- Keep humans in the loop—on purpose.

Like autonomous cars, most embodied systems will rely on remote assistance for a long time. Staff those “robot call centers” with local operators who can steer the robot toward the prevailing social contract and convert those interventions into training data. Make your operator‑to‑robot ratio a KPI you report and drive down over time.

- Audit for cultural fit and moral risk.

Cross‑cultural studies show ethical preferences cluster by region. Create a deployment ethics checklist that aligns robot behaviors with local expectations—while guarding against codifying bias. Avoid “moral colonialism” by letting local norms shape defaults, with transparent overrides for safety and law.

- Standardize a “civic handshake” for robot–robot courtesy.

Push your vendors (and competitors) toward open, human‑auditable negotiation channels (yes, including natural‑language summaries) so mixed fleets can cooperate—and so regulators can inspect how machines decided who went first.
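
A cultural overlay treated like a locale pack could look something like the minimal Python sketch below. It assumes a layered design: a base profile is refined by city, venue, and time‑of‑day packs, later packs win, every applied pack is recorded by name and version, and a floor owned by the safety stack cannot be overridden. The class names, fields, and the 0.5 m floor are hypothetical placeholders, not Noitom’s implementation or any real robot OS API.

```python
from dataclasses import dataclass, replace

# Hypothetical floor owned by the safety stack; no social overlay may go below it.
SAFETY_MIN_PASSING_M = 0.5


@dataclass(frozen=True)
class SocialBehavior:
    """Hypothetical knobs exposed by a social-behavior layer."""
    passing_distance_m: float       # preferred clearance when passing people
    walking_speed_mps: float        # cruising pace in shared spaces
    voice_cue_before_moving: bool   # announce before starting to move
    small_talk: bool                # allow chit-chat during interactions


@dataclass(frozen=True)
class LocalePack:
    """A named, versioned set of overrides for one city, venue, or time slot."""
    name: str
    version: str
    overrides: dict[str, object]


def resolve(base: SocialBehavior,
            packs: list[LocalePack]) -> tuple[SocialBehavior, list[str]]:
    """Apply packs in order (e.g. city, venue, time of day); later packs win.

    Returns the resolved behavior plus a trace of "name@version" entries so a
    deployment can report exactly which packs were active.
    """
    behavior, trace = base, []
    for pack in packs:
        # replace() raises TypeError on unknown field names, so typos fail loudly.
        behavior = replace(behavior, **pack.overrides)
        trace.append(f"{pack.name}@{pack.version}")
    # The safety floor is checked after all social overrides are applied.
    if behavior.passing_distance_m < SAFETY_MIN_PASSING_M:
        raise ValueError(
            f"passing distance {behavior.passing_distance_m} m is below the "
            f"safety floor of {SAFETY_MIN_PASSING_M} m (packs: {trace})")
    return behavior, trace


if __name__ == "__main__":
    base = SocialBehavior(passing_distance_m=1.0, walking_speed_mps=1.2,
                          voice_cue_before_moving=False, small_talk=False)
    city = LocalePack("example-city", "1.2.0",
                      {"passing_distance_m": 0.8, "small_talk": True})
    rush = LocalePack("rush-hour", "0.3.1",
                      {"walking_speed_mps": 1.4, "voice_cue_before_moving": True})
    print(resolve(base, [city, rush]))
```

Because overrides are plain data with a version string, each pack can be unit‑tested on its own and diffed between releases, and the safety check runs last so no combination of packs can quietly erode the floor.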

One last word on Hanoi vs. Tokyo

Street‑crossing is just a clean way to visualize the point. Vietnam’s road ecology, with two‑wheelers making up the vast majority of vehicles, logically favors continuous, negotiated motion; Japan’s system prioritizes rule‑visible coordination and pedestrian signal discipline. Both are safe when everyone reads the same script. Our robots must learn to read the script that’s playing right here, right now.

Why this matters for us

Our job isn’t to hard‑code etiquette; it’s to capture it, distill it, and let robots practice it safely. That’s why our work emphasizes end‑to‑end teleoperation, motion‑to‑meaning pipelines, and deployable datasets that reflect real social texture—not just physics. It’s the difference between a robot that merely avoids people and a robot that fits in with them.

Get in touch

Curious about pilots, research collaborations, or custom integrations?

Email us at: contact@noitomrobotics.com